Hi,

I have managed to follow the instructions you posted and replicate your examples with the LED and the force-sensor scale. I am interested in connecting 2 servos to the Yun and trying to generate the robot movement that you showcased in your video.

How have you generated robot movement using the Yun? I am pretty familiar with programming an Arduino Uno-powered robot with a Parallax shield that has a 5V voltage regulator.

Can you provide some suggestions/resources based on your experience?

thanks

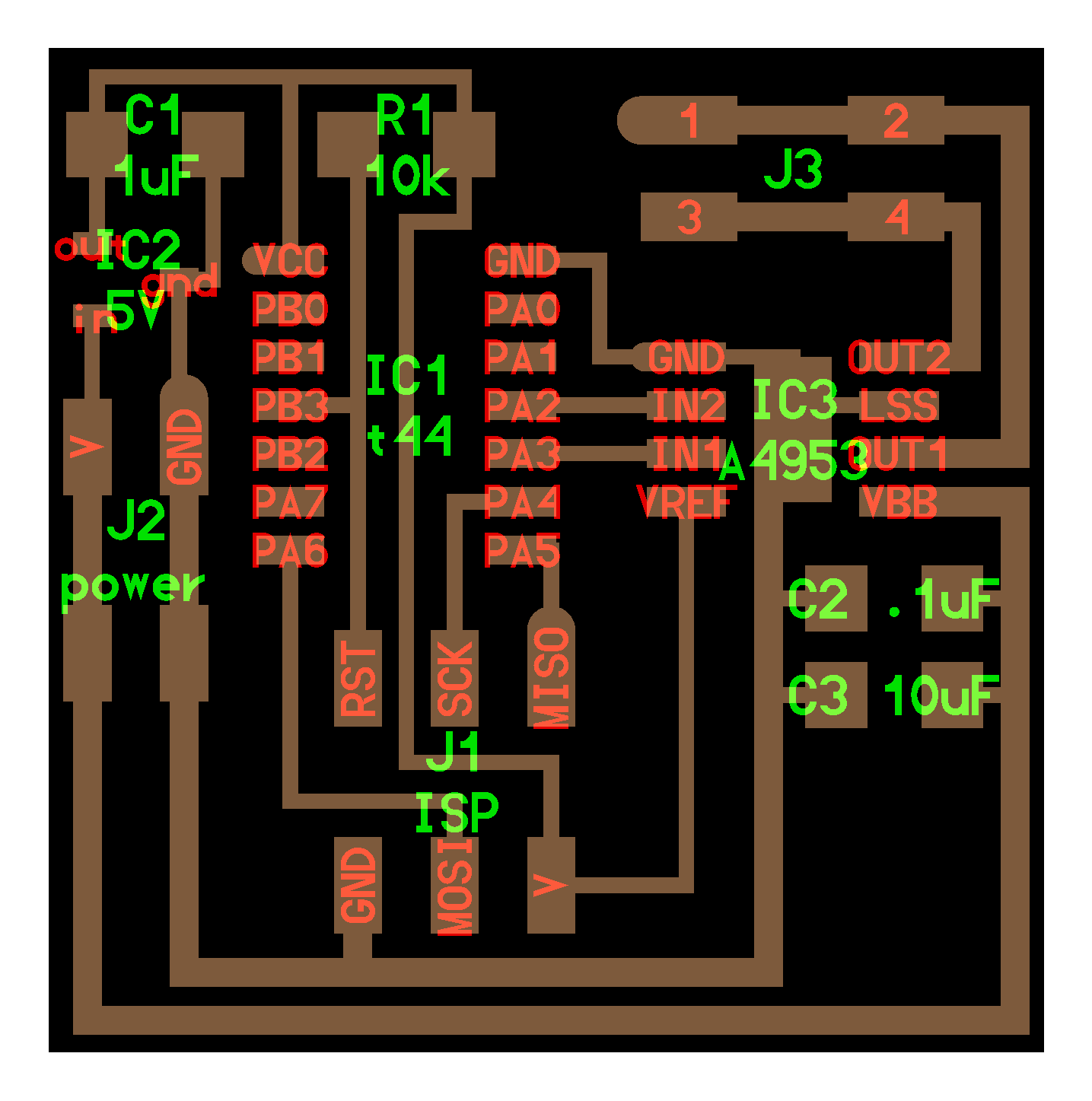

You will need to use an H-bridge to drive the motors. Something like this:

http://www.digikey.com/product-detail/en/A4953ELJTR-T/620-1428-1-ND/2765622

The MIT CBA has a circuit for it:

The H-bridge allows you to move a motor in two directions.

The motor can run at a different voltage than your Arduino Yun.
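To illustrate how the two inputs of an H-bridge select the direction, here is a minimal Python sketch. It assumes the IN1/IN2 truth table commonly given for the A4953 (forward, reverse, brake, coast); the function name `h_bridge_inputs` and the 8-bit duty values (matching the 0 to 255 range of Arduino's `analogWrite()`) are illustrative assumptions, so check the datasheet of the part you actually use.

```python
from typing import Tuple

# Hypothetical helper, not from any library. Assumed truth table (verify in your datasheet):
#   IN1=1, IN2=0 -> forward    IN1=0, IN2=1 -> reverse
#   IN1=1, IN2=1 -> brake      IN1=0, IN2=0 -> coast
def h_bridge_inputs(speed: float, brake: bool = False) -> Tuple[int, int]:
    """Return (in1, in2) as 8-bit duty values (0-255), like analogWrite().

    speed is in [-1.0, 1.0]: positive = forward, negative = reverse,
    0 = coast (or brake if brake=True). PWM goes on one input while the
    other is held low, so the motor alternates between driving and coasting.
    """
    if brake:
        return (255, 255)  # both inputs high: brake
    speed = max(-1.0, min(1.0, speed))  # clamp to the valid range
    duty = round(abs(speed) * 255)
    if speed > 0:
        return (duty, 0)   # PWM on IN1, IN2 low: forward
    if speed < 0:
        return (0, duty)   # IN1 low, PWM on IN2: reverse
    return (0, 0)          # both low: coast


if __name__ == "__main__":
    for cmd in (1.0, 0.5, -0.5, 0.0):
        print(cmd, h_bridge_inputs(cmd))
    print("brake", h_bridge_inputs(0.0, brake=True))
```

In an Arduino sketch, the same two values would be written with `analogWrite()` to two PWM-capable pins wired to IN1 and IN2.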

You can find more output device examples from the MIT Media Lab and CBA class "How to Make (almost) Anything" here: http://academy.cba.mit.edu/classes/output_devices/index.html

If the CBA example does not match your setup, you can find a tutorial for another component that runs the motor at 5 volts here:

http://itp.nyu.edu/physcomp/labs/motors-and-transistors/dc-motor-control-using-an-h-bridge/

WATCH OUT: Parts of the Yun run internally at only 3.3 volts, and the board has no onboard regulator for higher voltages. Never give it more than regulated 5 volts.

You can power it via the USB connector with regulated 5 volts.

Double-check and test your circuits.

You can learn more about the Arduino Yun here:


OK, thanks. I will have to get an H-bridge, and also find a way to control the robot without the USB connector; maybe a voltage regulator will help here.

One of my long-term goals is to test whether I can constrain the robot to move within a predefined area that I draw in the digital app.
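For keeping the robot inside an area drawn in the app, one common approach is a ray-casting point-in-polygon test. The sketch below assumes the drawn area is stored as a list of (x, y) vertices and that the robot position is known in the same 2D coordinate frame (for example, relative to the tracking marker); `inside_area` and the example coordinates are hypothetical, not part of the Reality Editor.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def inside_area(p: Point, polygon: List[Point]) -> bool:
    """Ray casting: count how often a horizontal ray from p to the right
    crosses the polygon's edges. An odd count means p is inside.
    Points lying exactly on an edge may be classified either way."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the polygon
        # Only edges that straddle the horizontal line through p can be crossed
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


if __name__ == "__main__":
    # Hypothetical drawn area: an L-shaped region, in centimeters from the marker
    area = [(0, 0), (100, 0), (100, 40), (40, 40), (40, 100), (0, 100)]
    for robot_pos in [(20, 70), (70, 70), (70, 20)]:
        state = "inside" if inside_area(robot_pos, area) else "outside: stop or turn back"
        print(robot_pos, state)
```

Because the test works for concave shapes, the drawn area does not have to be a simple rectangle.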

Can you also share how you approached the robot movement in the case of Lego? Did you use Arduino code for Lego EV3 as well?

thanks again

You will need to have big markers visible at all times while you are drawing.

I will add a switch to the Editor for the extended tracking that Vuforia offers.

That should give you more flexibility, as it keeps your marker as the origin even when the marker is not visible.

It will take some time for the App Store review…

Eventually, once HoloLens or Project Tango become widely available in consumer devices, we should be able to reliably sense the position of the visualization device in space. Then your example will be much easier to realize.

We ran many experiments to make better use of the iPhone/iPad built-in gyroscope and motion sensors in order to support scenarios like yours. The gyroscope works fine for rotations, but the motion sensor is of no use for stabilizing translational movement.
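A common explanation for the difference between rotation and translation: orientation is obtained by integrating the gyroscope's rate once, while position has to be obtained by integrating the accelerometer twice, so a small constant sensor bias grows linearly in the first case but quadratically in the second. The Python sketch below simulates a device lying still, with bias values (0.001 rad/s for the gyroscope, 0.01 m/s² for the accelerometer) assumed only for illustration; it ignores sensor noise and the sensor fusion the OS performs.

```python
def integrate_drift(gyro_bias: float, accel_bias: float,
                    duration: float, dt: float = 0.01):
    """Integrate constant sensor biases for a device that is actually at rest.

    Returns (angle_error_rad, position_error_m) after `duration` seconds.
    """
    angle = 0.0     # gyroscope rate integrated once
    velocity = 0.0  # accelerometer integrated once
    position = 0.0  # accelerometer integrated twice
    steps = round(duration / dt)
    for _ in range(steps):
        angle += gyro_bias * dt
        velocity += accel_bias * dt
        position += velocity * dt
    return angle, position


if __name__ == "__main__":
    for t in (1.0, 10.0, 60.0):
        angle_err, pos_err = integrate_drift(0.001, 0.01, t)
        print(f"after {t:5.1f} s: angle error {angle_err:.3f} rad, "
              f"position error {pos_err:.2f} m")
```

With these assumed biases, after 10 s the angle error is only about 0.01 rad, while the position error is already about half a meter, and after 60 s it reaches roughly 18 m.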

I have added the extended tracking to the editor.

It will become available in the App Store with version 0.1.2, which is currently waiting for review.

It took a little longer, but we have a new update.

It supports extended tracking and allows you to customize the entire Reality Editor experience.

It also allows you to hide the UI in case you want to record a nice looking demo.


I would suggest buying the Arduino microcontroller from a cheaper retailer.